Beyond the Basics: What You Can Do With Custom Drone Applications

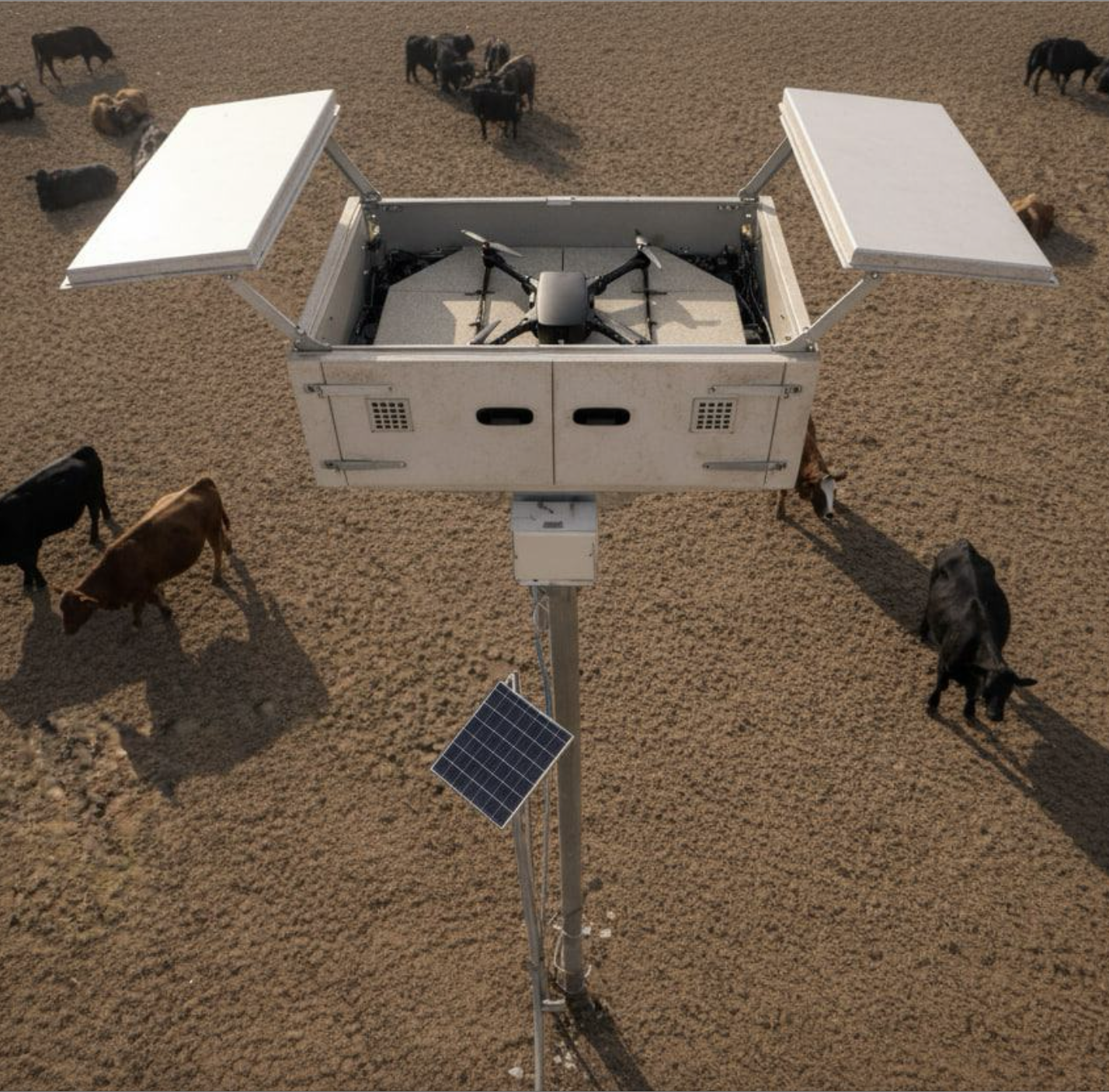

Beyond hardware, drones are becoming software-defined machines. What matters isn’t only the sensors you bolt on or the airframe you pick; it’s also the drone applications you can run at the edge and connect back to the cloud. From real-time mapping to AI-driven inspections, today’s UAVs can already host a growing library of mission-specific apps.

In this post, we’ll look at four categories of drone applications you can deploy right now: advanced navigation and control, AI and computer vision, onboard data processing and mapping, and multi-sensor fusion with specialized payloads.

Advanced Navigation & Control

With edge-deployed navigation apps, drones can hold altitude more precisely, gain better situational awareness, and adjust dynamically to changing conditions. Modern drone autopilots ship with features like autonomous waypoint planning, automatic takeoff and landing, GPS-denied flight, collision avoidance, and multi-drone swarming, all of which make flying smoother.

For instance, Skydio drones have an “AI Core” built around an NVIDIA edge computer and six computer vision cameras. Combined with onboard algorithms, the setup allows Skydio drones to build a continuous 360-degree map of the surroundings, recognize objects, and calculate motion plans on the spot. This fully onboard autonomy system allows even non-expert pilots to perform complex maneuvers (e.g., indoor inspections, following moving subjects) reliably.

While Skydio’s software is proprietary and tied to its platform, you can create similar drone navigation solutions with open-source components and deploy them on your hardware with Osiris AI.

Sample drone applications:

- Autonomous waypoint navigation

- Obstacle avoidance & collision prevention

- GPS-denied navigation

- Autonomous takeoff/landing/RTL

- Drone swarming scenarios
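
To make the first item concrete, here is a minimal sketch of the geometry behind autonomous waypoint navigation: how far and in which direction the next waypoint lies, and when to move on to the following one. It is an illustration only, not Skydio’s or Osiris AI’s implementation. The function names and the 2-metre acceptance radius are assumptions made for this example.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Return (distance in metres, initial bearing in degrees) from point 1 to point 2.

    Uses the haversine formula for distance and the standard
    great-circle formula for the initial bearing (0 = north, 90 = east).
    """
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)

    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

    y = math.sin(dlam) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlam)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing


def next_waypoint_index(position, waypoints, index, acceptance_radius_m=2.0):
    """Advance to the next waypoint once the drone is within the acceptance radius.

    `acceptance_radius_m` is a hypothetical tuning value, not a standard.
    """
    distance, _ = distance_and_bearing(*position, *waypoints[index])
    if distance <= acceptance_radius_m and index < len(waypoints) - 1:
        return index + 1
    return index


# Hypothetical two-point mission, coordinates as (latitude, longitude)
mission = [(50.0000, 30.0000), (50.0010, 30.0000)]
dist_m, heading_deg = distance_and_bearing(*mission[0], *mission[1])
```

A real autopilot layers wind compensation, altitude control, and failsafes on top of this, but the distance-and-bearing loop is the core idea.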

AI & Computer Vision

AI and computer vision, in particular, extend UAVs’ sensing capabilities. You can run pre-trained models on-device to enable better object recognition and tracking, surface classification, or anomaly detection. Effectively, you’re moving past data collection and towards on-the-fly (literally) situational awareness and decision-making.

For instance, Percepto recently launched an AI-powered Emission Detector for drones. The application analyzes captured thermal and optical footage to detect methane leaks. When it flags an anomaly, the operator receives an instant geotagged alert. And that’s just one of the drone applications AI enables.

Sample drone applications:

- Object recognition & tracking

- AI-assisted industrial inspections

- Structural scanning & photogrammetry

- Sensor-based anomaly detection
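
As a rough illustration of sensor-based anomaly detection with geotagged alerts, the sketch below flags readings that spike well above a rolling baseline. It is a deliberately simple stand-in (a z-score over the previous few samples), not how Percepto’s product works. The `Reading` type, the ppm unit, and the threshold values are all assumptions for the example.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Reading:
    lat: float
    lon: float
    value: float  # e.g. gas concentration in ppm (hypothetical unit)


def detect_anomalies(readings, window=5, z_threshold=3.0, min_sigma=0.1):
    """Return geotagged alerts for readings far above the rolling baseline.

    Each reading is compared with the mean and standard deviation of the
    `window` readings before it. `min_sigma` is a floor that keeps a
    perfectly flat baseline from causing a division by zero.
    """
    alerts = []
    for i in range(window, len(readings)):
        baseline = [r.value for r in readings[i - window:i]]
        mu = mean(baseline)
        sigma = max(stdev(baseline), min_sigma)
        z = (readings[i].value - mu) / sigma
        if z > z_threshold:
            alerts.append({
                "lat": readings[i].lat,
                "lon": readings[i].lon,
                "value": readings[i].value,
                "z_score": round(z, 1),
            })
    return alerts


# Hypothetical flight log: a steady background level with one spike
values = [2.0, 2.1, 1.9, 2.0, 2.1, 9.5, 2.0]
log = [Reading(lat=31.0 + i * 0.0001, lon=-102.0, value=v) for i, v in enumerate(values)]
alerts = detect_anomalies(log)
```

A production system would run a trained model on thermal and optical frames instead of a threshold on a single value, but the output has the same shape: a location plus evidence that something is off.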

Onboard Data Processing & Mapping

Onboard data processing enables more effective mapping and drone surveying missions. Instead of waiting hours for imagery to be uploaded and processed in the cloud, UAVs can now fuse sensor data and generate usable outputs as part of the mission itself. For instance, a drone can stream real-time LiDAR previews or log the raw GNSS data that PPK corrections need for survey-grade accuracy.

The DJI Zenmuse L1 LiDAR, mounted on the M300, can generate real-time 3D point clouds onboard and supports interactive previews (e.g., rotate, zoom, recenter) in the DJI Pilot app. Similarly, AgEagle offers a companion app for its eBee X drone that collects geotagged images during flight and then prepares the visuals for post-processing.

Sample drone applications:

- Real-time 3D LiDAR previews

- Onboard NDVI/agriculture analysis

- PPK geotagging for surveys

- Real-time SLAM mapping
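
Onboard NDVI analysis is a good example of how lightweight this kind of processing can be. NDVI is computed per pixel as (NIR - Red) / (NIR + Red), where NIR and Red are the reflectance values of the near-infrared and red bands. The sketch below applies that formula to small nested lists. The function names and sample values are made up for illustration, and a real pipeline would run the same arithmetic over full image arrays.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for a single pixel.

    Returns a value between -1 and 1 for non-negative reflectances,
    or 0.0 when both bands are zero.
    """
    total = nir + red
    if total == 0:
        return 0.0
    return (nir - red) / total


def ndvi_grid(nir_band, red_band):
    """Apply NDVI pixel by pixel to two equally sized 2D bands."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Hypothetical 2x2 reflectance tiles
nir_tile = [[0.50, 0.45], [0.30, 0.10]]
red_tile = [[0.10, 0.15], [0.30, 0.10]]
ndvi_tile = ndvi_grid(nir_tile, red_tile)
```

Higher values generally indicate denser, healthier vegetation, which is why an onboard NDVI map can help a pilot decide mid-flight which parts of a field deserve a closer pass.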

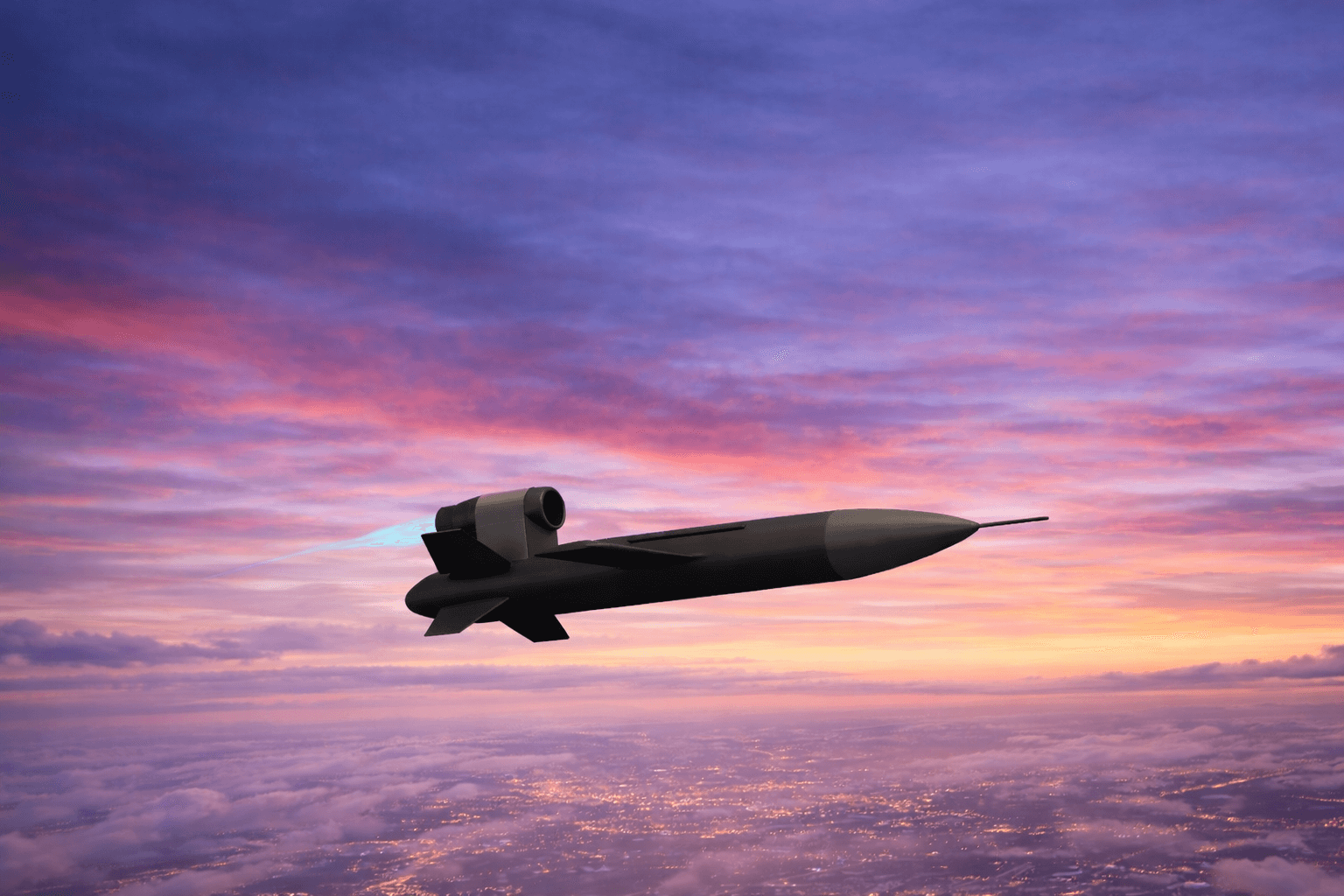

Onboard Data Fusion & EW

Thanks to onboard data fusion, drones gain advanced intelligence-gathering capabilities, from classifying tree species for a research mission to tracking RF emitters on a battlefield. The cool part? The same onboard computer can be configured to integrate LiDAR, optical, and thermal data for scientific research, as well as drive electronic warfare payloads where speed is critical.

A recent example of the above is the MUSCLES payload tested by the US Marine Corps on an MQ-9. The kit detects hostile radar or communication signals and immediately decides whether to jam or spoof them. All waveform recognition and jamming logic runs on the payload computer onboard, removing the latency of waiting for human approval. This capability illustrates the future of edge-deployed drone applications: autonomy not just in flight, but in how sensors and countermeasures respond to dynamic environments.

Sample drone applications:

- Multi-sensor fusion

- Real-time RF geolocation and triangulation

- Electronic warfare & jamming payloads

- Scientific edge analytics (e.g., tree classification, water sampling drones)
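
For a feel of what RF geolocation by triangulation involves, here is a minimal sketch that intersects two bearing lines measured from two known positions. It assumes a flat local east/north grid in metres and noise-free bearings, which is a simplification. The function name and all numbers are hypothetical and do not describe any fielded payload.

```python
import math


def triangulate(p1, bearing1_deg, p2, bearing2_deg, eps=1e-9):
    """Estimate an emitter position from two bearing measurements.

    p1 and p2 are (east, north) positions in metres on a local flat grid.
    Bearings are in degrees clockwise from north. Returns (east, north),
    or None if the bearings are parallel or the rays never cross.
    """
    # Unit direction vectors in (east, north) for each bearing
    d1 = (math.sin(math.radians(bearing1_deg)), math.cos(math.radians(bearing1_deg)))
    d2 = (math.sin(math.radians(bearing2_deg)), math.cos(math.radians(bearing2_deg)))

    denom = d1[0] * d2[1] - d1[1] * d2[0]  # 2D cross product d1 x d2
    if abs(denom) < eps:
        return None  # parallel bearings give no unique intersection

    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (dx * d2[1] - dy * d2[0]) / denom  # distance along ray 1
    t2 = (dx * d1[1] - dy * d1[0]) / denom  # distance along ray 2
    if t1 < 0 or t2 < 0:
        return None  # intersection lies behind one of the observers

    return (p1[0] + t1 * d1[0], p1[1] + t1 * d1[1])


# Two hypothetical measurement points 100 m apart, both hearing the same emitter
emitter = triangulate((0.0, 0.0), 45.0, (100.0, 0.0), 315.0)
```

With noisy real-world bearings, you would collect many measurements along the flight path and fit the position that best agrees with all of them, but the two-line intersection is the building block.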

Takeaways

Drone performance is no longer defined by hardware alone. What matters equally is the software running on the edge. Navigation apps help non-expert pilots fly complex missions. Computer vision models spot defects before they become failures. Onboard mapping compresses survey timelines from days to hours. Data fusion powers both scientific research and fast-reacting electronic warfare payloads.

The next frontier of UAV innovation is not new airframes, but the applications you deploy on them. To accelerate that future, explore Osiris AI — a drone operating system and toolkit designed for building and running modular applications directly at the edge.